Prometheus node exporter on Raspberry Pi – How to install

Introduction – Prometheus Node Exporter on Raspberry Pi. What does this tutorial cover? In this post we walk through configuring the Prometheus node exporter on a Raspberry Pi. When done, you will be able to export system metrics to Prometheus. Node exporter has over a thousand data points to export. It covers the basics […]

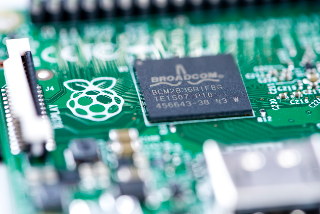

Build a Raspberry Pi image with Packer – packer-builder-arm

Introduction: Building a Raspberry Pi image with Packer. Today we are test driving packer-builder-arm, a tool that enables you to build a Raspberry Pi image with Packer (in addition to images for other ARM platforms). Packer-builder-arm is a plugin for Packer that extends it to support ARM platforms, including Raspberry Pi. Packer is a tool from HashiCorp for automating […]

Raspberry Pi PXE Boot – Network booting a Pi 4 without an SD card

What does this Raspberry Pi PXE Boot tutorial cover? This tutorial walks you through netbooting a Raspberry Pi 4 without an SD card. We use another Raspberry Pi 4 with an SD card as the netboot server. Allow 90-120 minutes to complete this tutorial end to end. It can be faster if […]